Google has released Gemma 3 270M, a new addition to its Gemma family of open models. With only 270 million parameters, the compact model is designed for efficiency and can run on low-power devices, including smartphones, without an internet connection. The goal is to give developers a specialized tool for building fast, affordable AI applications.

Designed for Efficiency and Specialization

Gemma 3 270M is built on a right-tool-for-the-job philosophy that favors efficiency over sheer power. Of its 270 million parameters, 170 million are embedding parameters and 100 million sit in the transformer blocks, a split that helps the model handle specific and rare tokens. That makes Gemma 3 270M a strong starting point for fine-tuning on a particular task or language. Its strength shows when it is tailored to well-defined tasks such as text classification, data extraction, sentiment analysis, and creative writing. By fine-tuning the compact model, developers can build production systems that are lean, fast, and much cheaper to run.
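To put the parameter split in perspective, the short sketch below adds up the two figures quoted above and estimates how much storage the weights would need at different precisions. The bytes-per-parameter values are generic assumptions about common number formats, not figures from Google, and the estimate ignores quantization metadata and runtime overhead.

```python
# Parameter counts as quoted in the article.
EMBEDDING_PARAMS = 170_000_000
TRANSFORMER_PARAMS = 100_000_000

# Assumption: nominal storage cost per parameter, in bytes, for common formats.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def total_params() -> int:
    """Total parameter count implied by the two quoted figures."""
    return EMBEDDING_PARAMS + TRANSFORMER_PARAMS


def embedding_share() -> float:
    """Fraction of all parameters that live in the embedding table."""
    return EMBEDDING_PARAMS / total_params()


def weight_megabytes(dtype: str) -> float:
    """Rough weight storage in MB (10**6 bytes) for the given format."""
    return total_params() * BYTES_PER_PARAM[dtype] / 1e6


if __name__ == "__main__":
    print(f"total parameters: {total_params():,}")
    print(f"embedding share:  {embedding_share():.1%}")
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: ~{weight_megabytes(dtype):.0f} MB")
```

Under these assumptions, roughly 63% of the parameters are embeddings, and a 4-bit version of the weights would occupy on the order of 135 MB.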

Performance and Power Consumption

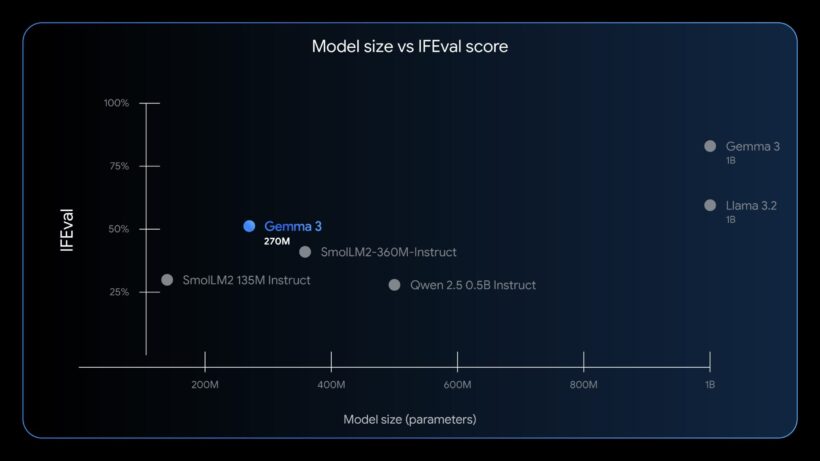

Despite its small size, Gemma 3 270M performs well, especially on instruction-following tasks. An instruction-tuned variant scored 51.2% on IFEval, a benchmark that measures how well a model follows instructions, outperforming similarly sized models. Low power consumption is another key characteristic. In Google's internal tests on a Pixel 9 Pro smartphone, the INT4-quantized version of the model used just 0.75% of the battery across 25 conversations. This efficiency makes it viable for privacy-sensitive and offline use cases.
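The battery figure is easier to interpret per conversation. The sketch below does that arithmetic using only the two numbers reported above. The extrapolation to a full charge is a simplifying assumption: it treats battery drain as linear and ignores everything else the phone is doing, and the length of each test conversation is not stated here.

```python
# Figures reported from Google's internal Pixel 9 Pro test.
BATTERY_PERCENT_USED = 0.75
CONVERSATIONS = 25


def percent_per_conversation(used: float = BATTERY_PERCENT_USED,
                             conversations: int = CONVERSATIONS) -> float:
    """Average battery percentage consumed by one conversation."""
    return used / conversations


def conversations_per_full_charge(used: float = BATTERY_PERCENT_USED,
                                  conversations: int = CONVERSATIONS) -> float:
    """Hypothetical count if the model alone drained the battery linearly."""
    return 100.0 / percent_per_conversation(used, conversations)


if __name__ == "__main__":
    print(f"per conversation: {percent_per_conversation():.3f}% of battery")
    print(f"linear extrapolation: ~{conversations_per_full_charge():.0f} conversations per charge")
```

That works out to about 0.03% of the battery per conversation, or a few thousand conversations per charge under the linear assumption.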

Availability and Applications

Google has published Gemma 3 270M on multiple platforms, including Hugging Face, Docker, Kaggle, Ollama, and LM Studio. It is available in both pretrained and instruction-tuned versions. The model's capabilities support a range of creative and practical applications that work offline. One demonstrated example is a Bedtime Story Generator web app, which produces original stories from prompts the user enters. It illustrates the model's potential to power a new class of on-device AI applications that do not need an internet connection.
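For readers who want to try something similar, here is a minimal sketch of a story generator built on the Hugging Face `transformers` library. It is not the code behind Google's demo app. The repository name `google/gemma-3-270m-it`, the prompt wording, and the helper names `build_story_messages` and `generate_story` are all assumptions made for illustration.

```python
# Assumption: Hugging Face repository name of the instruction-tuned model.
MODEL_ID = "google/gemma-3-270m-it"


def build_story_messages(topic: str) -> list[dict]:
    """Wrap a user's topic in a single-turn chat message list."""
    prompt = f"Write a short bedtime story about {topic.strip()}."
    return [{"role": "user", "content": prompt}]


def generate_story(topic: str, max_new_tokens: int = 256) -> str:
    """Generate a story locally; requires the transformers package and model weights."""
    # Imported lazily so build_story_messages works without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=MODEL_ID)
    result = generator(build_story_messages(topic), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last entry is the model's reply.
    return result[0]["generated_text"][-1]["content"]


if __name__ == "__main__":
    # Only builds the prompt; call generate_story(...) to run the model.
    print(build_story_messages("a sleepy dragon"))
```

Calling `generate_story("a sleepy dragon")` would download the weights on first use, which needs a network connection and may require accepting the Gemma license on Hugging Face. After that, generation should run from the local cache.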